“The student becomes the teacher.”

That age-old saying is more than a feel-good trope. When learners explain a concept to someone else, they expose gaps in their own understanding and get a new angle on the material. Even a quick question like “Can you give an example?” tells an instructor exactly where clarity is missing and how to improve the next pass at a lesson.

Great teachers power that loop every day. They scan the room for furrowed brows, listen for hesitant pauses, and mentally log each moment that hints at confusion. Over years and thousands of interactions, those micro-signals shape stronger pedagogy. But modern classrooms rarely give teachers enough time to collect every data point, especially when dozens of students need attention at once.

When Feedback Scales, Learning Compounds

Reinforcement, the same principle that helps students master new skills, works just as well on teachers. The more accurately an instructor can read feedback, the faster they iterate toward an explanation that sticks.

In reality, teachers juggle limited time, large rosters, and inconsistent feedback. Students do not always raise their hands to ask for another example, so the most valuable signals—confused expressions, tentative nods, silent notebooks—go unrecorded. It is no wonder that personalized instruction feels like an aspirational ideal rather than an everyday reality.

Enter AI as a Learning Partner

Large language models (LLMs) cannot yet decode a raised eyebrow, but they excel at parsing text-based exchanges. That makes them surprisingly good partners for practicing the learning-by-teaching method: have a student “teach” an AI agent, get probing follow-up questions in return, and let the dialogue surface misconceptions automatically.

At a high level, the flow looks like this:

- Student explanation: A learner walks the agent through a concept.

- Agent inquiry: The agent asks clarifying or challenging questions, simulating an eager pupil.

- Iterative refinement: The student rephrases, reaches for examples, and fills gaps—effectively coaching both the model and themselves.

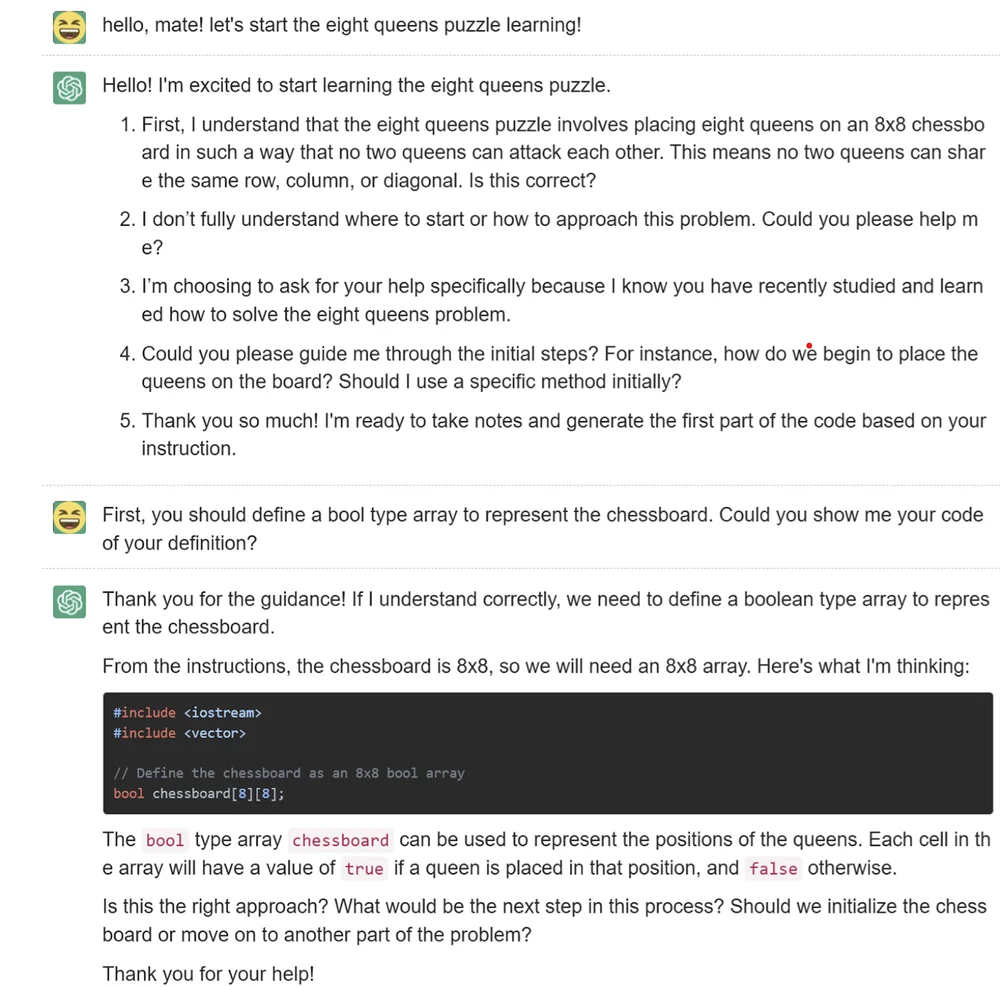
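For readers who think in code, the three steps above can be sketched as a simple loop. This is a minimal illustration, not the study's implementation: `TeachingSession` and `curious_pupil` are hypothetical names, and the keyword-based question picker is a toy stand-in for what would be an LLM call in a real teachable agent.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical stand-in for an LLM call: it receives the transcript so far
# and returns the agent's next question. A real agent would query a model.
AskFn = Callable[[List[str]], str]


def curious_pupil(transcript: List[str]) -> str:
    """Toy question picker that mimics an eager pupil (not a real LLM)."""
    last = transcript[-1].lower()
    if "for example" not in last:
        return "Can you give an example?"
    if "because" not in last:
        return "Why does that work?"
    return ""  # an empty string means the pupil has no further questions


@dataclass
class TeachingSession:
    ask: AskFn = curious_pupil
    transcript: List[str] = field(default_factory=list)

    def explain(self, explanation: str) -> str:
        """Record the student's explanation and return the agent's follow-up."""
        self.transcript.append(explanation)       # step 1: student explanation
        question = self.ask(self.transcript)      # step 2: agent inquiry
        if question:
            self.transcript.append(question)
        return question                           # step 3: student refines next turn


if __name__ == "__main__":
    session = TeachingSession()
    print(session.explain("A queen attacks along rows, columns, and diagonals."))
    print(session.explain("For example, queens on (0, 0) and (1, 1) clash."))
```

Swapping `curious_pupil` for a function that calls a language model would keep the loop intact; the point of the sketch is that the student, not the agent, supplies the explanations.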

Inside the Experiment

Researchers from several Chinese universities recently put this idea to the test by asking computer science students to tackle the classic Eight Queens Problem. Half the cohort solved the puzzle independently. The other half used a “teachable agent” (TA) built on GPT-4 that could ask questions, request feedback, and mirror the cadence of a curious student.

After the coding session, both groups completed a knowledge assessment. The TA group scored significantly higher, suggesting that guiding an AI through the solution helped them retain more contextual knowledge than working solo. That is a win for learning-by-teaching, and a sign that LLMs can do more than spit out ready-made answers.
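If the puzzle is unfamiliar: the goal is to place eight queens on a chessboard so that no two share a row, column, or diagonal. The sketch below shows one common way to solve it, a backtracking search. It is our own illustration, not code from the study or from its participants, and the function name `solve_queens` is an assumption chosen for this example.

```python
from typing import Iterator, List


def solve_queens(n: int = 8) -> Iterator[List[int]]:
    """Yield each solution as a list where index = row and value = column."""
    cols: List[int] = []  # cols[r] is the column of the queen placed in row r

    def safe(row: int, col: int) -> bool:
        # A new queen clashes if it shares a column or a diagonal with
        # any queen already placed in an earlier row.
        return all(
            c != col and abs(c - col) != row - r
            for r, c in enumerate(cols)
        )

    def place(row: int) -> Iterator[List[int]]:
        if row == n:
            yield cols.copy()  # every row has a queen: record the solution
            return
        for col in range(n):
            if safe(row, col):
                cols.append(col)           # tentatively place a queen
                yield from place(row + 1)  # try to fill the remaining rows
                cols.pop()                 # backtrack and try the next column

    yield from place(0)


if __name__ == "__main__":
    print(next(solve_queens(8)))  # one valid placement, as a list of columns
```

Explaining why `safe` checks `abs(c - col) != row - r` is exactly the kind of question a teachable agent might push a student to answer out loud.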

Want to read the full study? Check out “Learning by Teaching with ChatGPT” by Chen et al. (2024).

Helping AI Help You

The study’s scope was intentionally narrow (one problem, one programming language, one type of agent), but the implications are broad. If AI can nudge students to articulate their reasoning, it could serve as a scalable proxy for the kind of formative feedback teachers crave. The key is designing prompts and guardrails that prioritize learning over shortcut answers.

That is the philosophy we bring to Grassroot. Our tutors guide students to think out loud, ask targeted follow-ups, and iterate on each response. Instead of handing over a finished solution, we create the same positive feedback loop uncovered in the GPT-4 experiment, only now the “agent” is a carefully trained tutor who adapts to every learner’s strengths, misconceptions, tone, and interests.

Beyond Chess Boards and Code Blocks

Grassroot’s platform pairs each student with a responsive tutor—human or AI—who keeps learning conversational and contextual. Whether a learner is deciphering physics, anatomy, or creative writing, we analyze their explanations, adjust instruction in real time, and capture the nuances that go missing in large classrooms.

We may not read body language like a veteran educator, but we do pay close attention to the signals embedded in a student’s words, pauses, and self-checks. Those signals fuel a positive feedback loop: students teach us how to teach them, and we respond with ever-better guidance.

Ready to build your own feedback loop? Sign up for Grassroot and work with tutors who learn alongside you.

Source: Chen, Angxuan, et al. "Learning by teaching with ChatGPT: The effect of teachable ChatGPT agent on programming education." British Journal of Educational Technology (2024).