Technology: an Enabler of Laziness?

In last week’s piece (Teaching AI How to Teach Better), we discussed how teachable agents in ChatGPT can facilitate knowledge acquisition in computer science students. When students are encouraged to explain a complex topic to an AI agent acting as a peer, they have the opportunity to reorganize content conceptually and evaluate their knowledge blind spots. And with the rapid advancement of AI, students have more access than ever to “peer learners” that can promote the understanding of complex ideas.

Yet, greater technological sophistication also carries a tremendous downside for learners: the risk of increased dependency. The technical term for this is cognitive offloading: the shifting of one’s cognitive load (i.e., the intensity of tasks performed by one’s working memory) onto an external tool. Cognitive offloading is far from a new phenomenon. Decades ago, the advent of the portable calculator, much to the chagrin of many an elementary school teacher, made it easy to avoid arduous long division. Likewise, automatic spellchecking on mobile and desktop devices has all but eliminated the need for users to recall the correct spelling of the words they use in day-to-day communication.

But when thinking about cognitive offloading in the context of AI, we are not talking about the elimination of rote, mechanical operations such as performing long division or memorizing the spelling of complex words. With AI, cognitive offloading can occur at a more foundational level as users employ the technology to perform comparatively demanding tasks such as ideation and writing. You can read more about that in our pieces on the use of ChatGPT as a study tool (ChatGPT as a Study Tool) and how the LLM compares to competitors in the domain of college application essays (AI in College Applications).

In any case, the increasing reliance of many users on AI for more thought-intensive tasks raises the question: how does cognitive offloading through AI harm users in daily life? In a study published this year (Gerlich 2025), a researcher in Switzerland sought to answer this question by quantifying the correlations among the use of AI tools, cognitive offloading, and critical thinking.

The Research: The Relationship between AI Usage and Critical Thinking

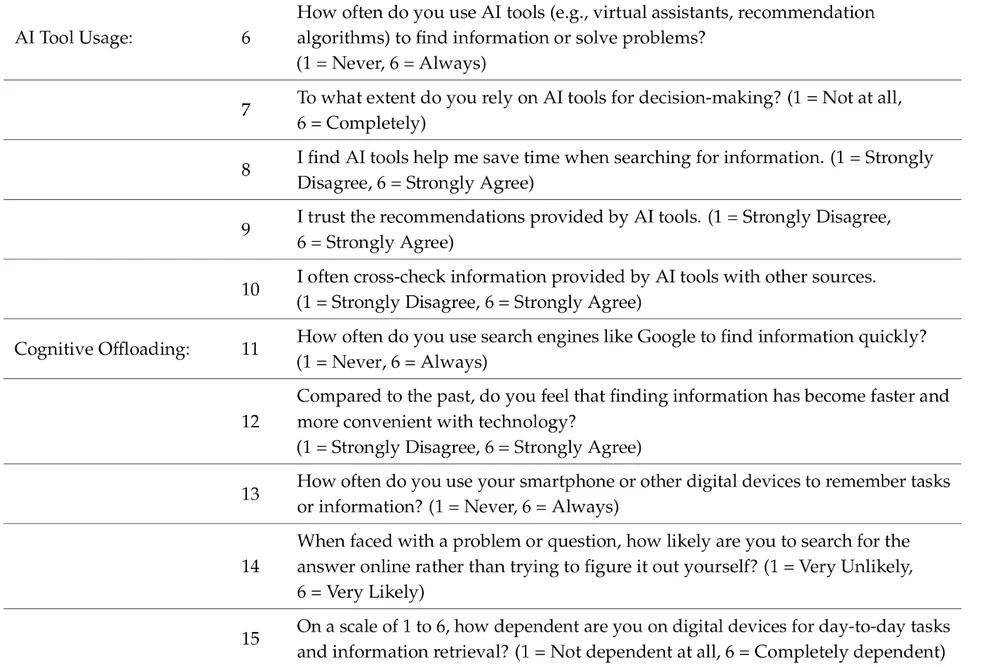

In his research, Michael Gerlich surveyed nearly seven hundred respondents of various ages, professions, and education levels to measure how they scored on three themes: AI tool usage, cognitive offloading, and critical thinking. Respondents answered questions on a six-point scale, which Gerlich used to develop quantifiable scores for each theme. Below you will find the questions used to quantify AI tool usage and cognitive offloading in participants.

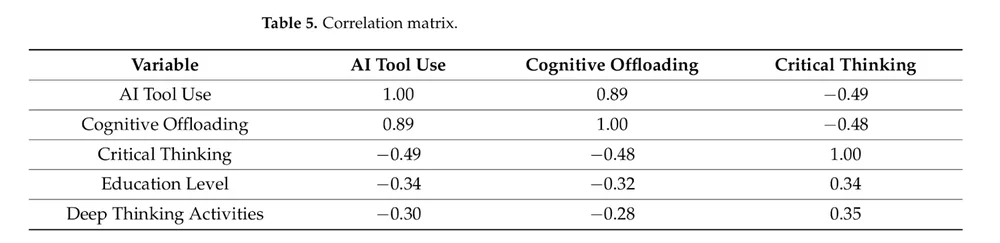

With scores aggregated across AI tool usage, cognitive offloading, and critical thinking, Gerlich then conducted a correlation analysis to assess the strength of the relationships among these factors, as well as with demographic information such as education level. Selected results of that analysis are shown below.

Immediately apparent is the correlation coefficient of 0.89 between AI tool use and cognitive offloading, which indicates a strong, positive relationship between the two. As users report greater usage of AI tools, they also report statements consistent with cognitive offloading, almost to the same degree. Given what we discussed earlier about cognitive offloading, this should not come as a surprise.

A little less obvious is the -0.49 coefficient between AI tool use and critical thinking, a moderate negative relationship between the two factors. In other words, users who report greater AI usage also tend to give answers consistent with decreased critical thinking. It is important to note that this study suggests a correlative (not necessarily causal) relationship between AI usage and behaviors such as reduced critical thinking, so it is not a fair characterization to say that “AI makes users dumber.” However, it is clear that those who use AI comparatively more report, by their own account, reduced critical thinking compared to their less dependent counterparts.
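To make the correlation figures above more concrete, here is a minimal Python sketch of how such coefficients can be computed. It is not Gerlich's actual procedure or data: the respondents and their answers are invented for illustration, averaging the six-point answers into one score per theme is an assumption about how the scores were aggregated, and the names `theme_score` and `pearson_r` are hypothetical helpers.

```python
from math import sqrt


def theme_score(answers):
    """Average one respondent's six-point answers into a single theme score.

    Assumption: the study's exact aggregation is not described here,
    so a plain mean is used purely for illustration.
    """
    return sum(answers) / len(answers)


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


# Hypothetical data: five invented respondents, three answers (1-6) per theme.
ai_use_answers = [(1, 2, 1), (2, 3, 2), (4, 4, 3), (5, 5, 6), (6, 5, 6)]
offloading_answers = [(2, 1, 2), (3, 2, 2), (3, 4, 3), (5, 6, 5), (6, 6, 5)]
critical_answers = [(6, 5, 5), (4, 5, 5), (5, 3, 4), (3, 4, 2), (3, 2, 3)]

ai_use = [theme_score(a) for a in ai_use_answers]
offloading = [theme_score(a) for a in offloading_answers]
critical = [theme_score(a) for a in critical_answers]

r_offloading = pearson_r(ai_use, offloading)  # positive in this toy data
r_critical = pearson_r(ai_use, critical)      # negative in this toy data

print(f"AI use vs. cognitive offloading: r = {r_offloading:.2f}")
print(f"AI use vs. critical thinking:    r = {r_critical:.2f}")
```

A coefficient close to 1 or -1 means the two scores move together almost in lockstep, while a value near 0 means little linear relationship. Either way, the number describes association only and says nothing on its own about which factor, if any, causes the other.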

The research also shows that, of the demographic variables recorded for respondents (such as education level and profession), age was strongly correlated with this pattern. Unsurprisingly, young people were the most likely to report statements consistent with cognitive offloading and reduced critical thinking. The bad news is that, considering how much more the younger generation relies on AI than their older counterparts do, this observation does not bode well for their future development.

Guarding Against Cognitive Offloading

Though this study is not a lab experiment demonstrating that greater AI usage directly causes a drop in cognitive load, several studies are investigating that very hypothesis, for example by examining changes in gray matter volume over time. While such research is better suited for discussion in another piece, the age component uncovered in this study cannot be overstated. If young people are especially vulnerable to decreased critical thinking, we must strive to reverse course as quickly as possible because the prefrontal cortex, the part of our brains responsible for reasoning and higher-order thought, reaches maturity in one’s twenties. That is, even though neuroplasticity (the property of the brain to change and adapt in response to learning) is observed throughout one’s life, the closing of the critical period of development in early adulthood can make the formation of good study habits, and the undoing of bad ones, much more difficult.

This is why we at Grassroot take great care in studying how the AI in our tutors performs, with feedback from real students and educators used to refine the learning experience to become as engaging and fruitful as possible. This means employing guardrails that prevent the output of easy answers and instead encourage critical thinking processes in users. Check out the image below, taken from a live learning session in our Introduction to Investing course.

Grassroot’s tutors are guides and supporters, not a free pass to cheat on homework. But our commitment to promoting critical thinking in students goes beyond simply refusing to hand out straight answers. Because our tutors learn from students (Teaching AI How to Teach Better) just as they learn from us, we are able to predict the areas in which each unique user struggles and help them learn in a way that makes the most sense to them. With Grassroot, we have developed an experience that allows students to use AI not as a crutch or even a tool, but rather as a thought partner, giving them the best chance possible to stay ahead in this era of AI (Staying Ahead with AI).

So try Grassroot out now and see what you think. But be warned: you might do more learning than you expect. Start for free →

Source: Gerlich, Michael. "AI tools in society: Impacts on cognitive offloading and the future of critical thinking." Societies 15.1 (2025): 6.