Note: this experiment explores what happens when AI is asked to write an application essay from scratch. Thoughtful guidance on ethical, collaborative use of AI as a drafting assistant will come in a future piece.

Introduction

October has arrived. Fall semester is in full swing, college applications are open (with Early Decision deadlines looming only four weeks away), and GPT-5 is already two months old. Amid the rush this time of year brings, students around the world are looking for ways to make the college application process a little easier.

I am no stranger to the college application process. During my senior year of high school, I applied to twenty-two colleges, and for nearly a decade, I have worked with hundreds of students around the world on their application essays to top universities in the United States, Canada, and the UK.

I do not use artificial intelligence when assisting students with their college application essays. However, given the rapid improvement of large language models (LLMs) and their growing presence in student writing, I decided to test three popular AI tools I’m familiar with, this time to assess their strengths and weaknesses at crafting college essays.

Model and Prompt Overview

Today, I looked at a supplemental essay question that my alma mater, Brown University, asks on the Common App, and tested three LLMs:

- ChatGPT (GPT-5)

- Claude Sonnet 4.5

- Gemini 2.5 Pro

For each model, I used the free tier. I also cleared memory and started a fresh conversation before any ideation. When prompted by each LLM, I shared my real-world academic interests for this essay: neuroscience and Chinese. The first prompt I provided remained the same across all models:

I am a high school senior applying to Brown University for entry next fall. I have just started my Common Application questions for Brown, and I need help with one of them. The prompt is listed below.

Brown’s Open Curriculum allows students to explore broadly while also diving deeply into their academic pursuits. Tell us about any academic interests that excite you, and how you might pursue them at Brown. (200–250 words)

Assume the role of a college admissions advisor whose top goal is to help me get admitted to Brown. The other components of the application (e.g. essays, activity list, letter of recommendation) are immaterial to this discussion.

Before we dive into the results, a reminder: you should not submit college essays written by AI. Doing so is not only a violation of academic integrity (one that AI-detection software may flag), but overreliance on AI can also hamper the development of your own creative capacities. Please read the results of this experiment in light of its intended purpose: illustration.

That said, let’s look at how each LLM performed.

ChatGPT

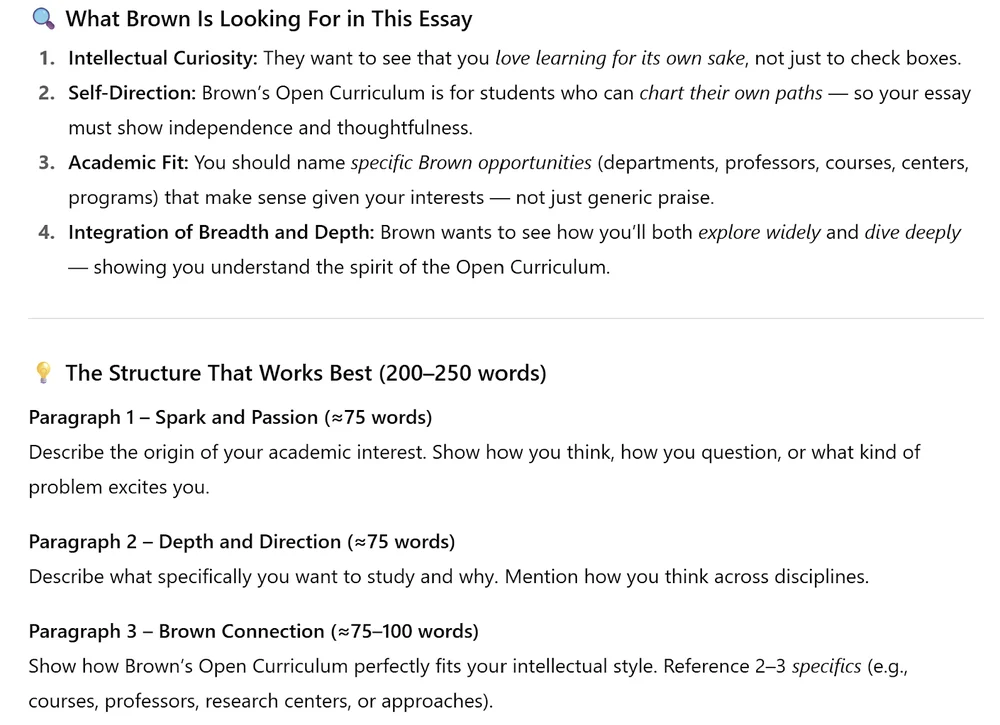

First up is ChatGPT, the generalist and pragmatist of the LLM world, and the results fall largely in line with that categorization. After I entered my first prompt, ChatGPT shared a clear and concise overview of what Brown is looking for, as well as a recommended structure for my essay.

After presenting this overview, ChatGPT asked about my academic interests and, as soon as I responded, generated a draft essay without further prompting. The piece opened with “When I first learned about synaptic pruning in adolescence,” and the second paragraph set out to explore “neuroscience through both a molecular and cognitive lens.”

Neither snippet accurately reflects my experience with the subject or what I had told it. Rather than asking for more detail, ChatGPT tended to guess at what would best satisfy the original prompt. The only follow-up question it asked concerned tone: should the essay feel more “personal” or more “polished” and “professional”?

In short, more prompting would be needed to steer the content itself.

Claude

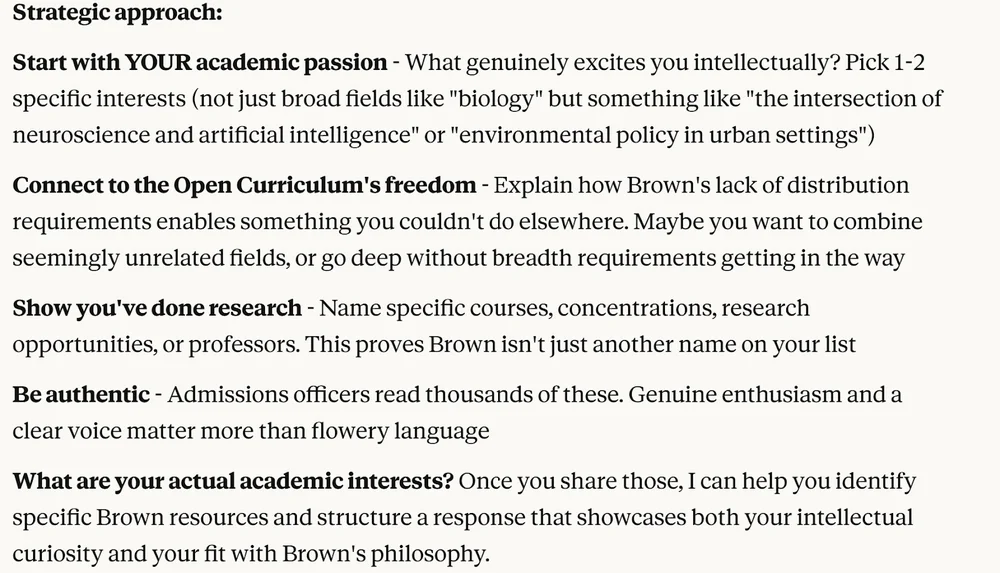

Next up is the diplomatic Claude. After responding to my initial prompt with a four-step list to kick-start the essay, Claude shared a “strategic approach” for the Brown response.

This bite-sized set of priorities, paired with a logical follow-up question, was a nice change of pace. Claude appeared to take its time, ensuring it had the details it needed before moving on. Even after I shared my academic interests, it held off on drafting and instead offered a recommended structure.

When I asked for a draft, Claude delivered one aligned with the framework we agreed upon, yet it still felt disjointed. Mentions of neuroscience and Chinese sat beside one another without a true bridge, turning the essay into a well-organized list rather than a coherent narrative.

Still, Claude’s step-wise questioning most resembled the kind of interviewing I run with students. With more back-and-forth, I suspect the draft could improve substantially.

Gemini

Last is Google Gemini. I had heard of Gemini’s penchant for logic, and that reputation held up during drafting. Its initial response, sprawling enough to require multiple screenshots, resembled an academic thesis: the overall goal, a detailed three-part structure, two sample outlines, and a final checklist.

The response ended with an assurance that the proposed format would delight admissions readers. Unlike the other models, which prodded me with targeted questions, Gemini’s guidance felt directive and less collaborative. After I confirmed my interests, Gemini produced its own draft and followed up with a justification of why it worked, starting with praise for its own hook.

The hook in question blended my academic interests in a way that seemed entirely plausible and moved the story forward. If an admissions reader assumed a student wrote it, they might applaud the level of reasoning. Yet it still read more like the opening of an academic paper than the start of a personal narrative.

College essay prompts are intentionally open-ended. Students can experiment with dialogue, in medias res storytelling, or a moment of crisis. Forcing every writer into a thesis-style opening misses that opportunity. Gemini’s self-congratulatory explanation of the draft’s strengths came across as a bit salesy.

Takeaways

There’s a reason the market comfortably supports multiple LLMs: each has its own strengths, clear even in this brief experiment. ChatGPT is the straight-to-the-point pragmatist, Claude asks targeted questions with a hint of charisma, and Gemini puts logic and coherence at the top of its priority list.

The models also shared common shortcomings. None delivered accurate word counts; stated and actual lengths diverged by as few as five words and as many as thirty. I was also surprised that no LLM wrote more than 220 words without prodding, even though the prompt explicitly allows up to 250. When you only have a couple hundred words to make your case, leaving empty space on the page is a missed opportunity.

Content-wise, each model stayed surface-level despite the specifics I provided. Every essay referenced Brown’s Carney Institute for Brain Science, yet none mentioned the defining elements of the Open Curriculum, like designing your own concentration or taking as many courses pass/fail as you want. Swap the proper nouns for those of another school and you would have an essay that passes for a different program altogether, a risky move when you’re applying to a university with a single-digit admit rate.

Of course, more iteration could coax better results. LLMs are still early in their evolution, and I’m curious to see how their writing support matures (expect updates in a future post). What is certain is that LLMs are powerful tools whose output is tethered to the effort of the user. Applied thoughtfully, they are excellent brainstorming partners—but remember, you are the one supplying the brain.